LLM Reasoning and Self-Improvement: The Path to Smarter AI

This blog series is based on learnings and insights from the Advanced LLM Agents MOOC. This write-up builds on inference-time techniques, the topic of the previous lecture.

Large Language Models (LLMs) have made tremendous strides in recent years, demonstrating impressive abilities in natural language understanding and generation. However, their reasoning capabilities, especially on complex or nuanced tasks, still lag behind human-level performance. Framing LLM reasoning in terms of System 1 and System 2 thinking, together with self-improvement mechanisms such as self-rewarding models, meta-rewarding, and EvalPlanner, marks a significant step forward in AI capabilities.

This write-up explores these advancements, discussing their potential to create more autonomous and intelligent LLMs while addressing the challenges that come with self-improvement.

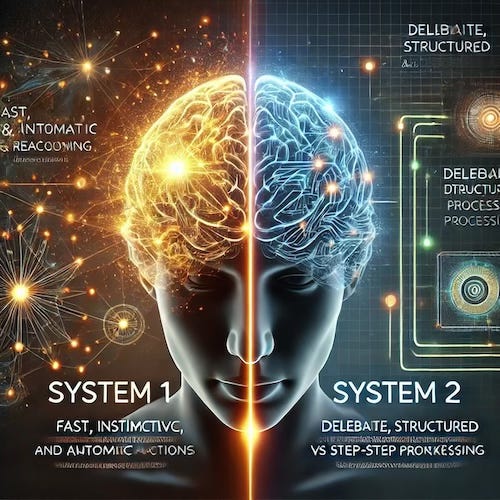

System 1 vs. System 2 Reasoning in LLMs

In the context of cognitive psychology, System 1 and System 2 represent two distinct modes of thinking.

System 1 Reasoning: This mode of reasoning is fast, intuitive, and relies on heuristics and quick associations. It operates within the neural network itself, directly outputting answers with fixed computation per token. While efficient, System 1 is prone to errors such as hallucinations and biases due to its reliance on pattern recognition rather than deep reasoning.

System 2 Reasoning: In contrast, System 2 is slower but more deliberate and accurate. It involves generating a chain of thought before arriving at a final response, allowing for planning, verification, and logical deduction. This method helps mitigate the shortcomings of System 1 by enhancing accuracy and reliability.

While LLMs can mimic System 1 thinking to a certain extent, producing fluent and quick responses, reliable reasoning requires System 2 processes. By integrating System 2 techniques such as chain-of-thought prompting, verification mechanisms, and iterative refinement, LLMs can improve their reasoning and reduce errors. I covered Chain-of-Thought in a previous blog; here I focus on verification mechanisms and iterative refinement.
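To make "verification and iterative refinement" concrete, here is a minimal sketch of a System 2 style loop: draft an answer, check it, and retry with the rejected drafts as feedback. The function names and the toy "model" and verifier are hypothetical stand-ins for LLM calls; the point is the control flow, and that checking an answer is often cheaper than producing it.

```python
from typing import Callable, List, Optional

def generate_verify_refine(
    propose: Callable[[str, List[str]], str],
    verify: Callable[[str], bool],
    problem: str,
    max_rounds: int = 3,
) -> Optional[str]:
    """Draft an answer, check it, and retry with the rejected drafts as feedback."""
    rejected: List[str] = []
    for _ in range(max_rounds):
        candidate = propose(problem, rejected)  # deliberate "draft" step
        if verify(candidate):                   # explicit verification step
            return candidate
        rejected.append(candidate)              # refinement: the next draft sees what failed
    return None  # no verified answer within the compute budget


# Toy stand-ins (hypothetical): a "model" that walks through fixed guesses
# and a verifier for which checking is cheaper than solving.
GUESSES = ["13", "15", "17"]

def toy_propose(problem: str, rejected: List[str]) -> str:
    return next(g for g in GUESSES if g not in rejected)

def toy_verify(candidate: str) -> bool:
    n = int(candidate)
    return 1 < n < 391 and 391 % n == 0  # is it a nontrivial factor of 391?

print(generate_verify_refine(toy_propose, toy_verify, "Find a nontrivial factor of 391"))  # 17
```

Spending more rounds buys more chances to get a verified answer, which is the System 2 trade of extra computation for reliability.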

Reinforcement Learning from Human Feedback (RLHF)

Reinforcement Learning from Human Feedback (RLHF) has been a key driver in aligning LLMs with human preferences. Human annotators compare or rate model outputs, a reward model is trained on these labels, and the LLM is then optimized with reinforcement learning to produce responses that the reward model scores highly. In this way, human feedback on the quality and relevance of outputs steers the model toward more accurate and desirable responses.
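A common way to train the reward model from human comparisons is a pairwise Bradley-Terry objective: the loss is small when the model scores the annotator's preferred response above the rejected one. The sketch below assumes the reward model's outputs are already available as plain floats; a real setup would compute them with a neural network and average the loss over a batch.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Low when the reward model scores the human-preferred response higher.
    """
    margin = reward_chosen - reward_rejected
    # Two algebraically equal forms, chosen so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for one human-labeled comparison.
print(round(preference_loss(2.0, 0.0), 4))  # agrees with the annotator: 0.1269
print(round(preference_loss(0.0, 2.0), 4))  # disagrees with the annotator: 2.1269
```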

Benefits of RLHF:

Aligns models with human values and expectations.

Enhances response quality in various tasks such as summarization, translation, and content moderation.

Helps mitigate biases and hallucinations through curated feedback loops.

Limitations of RLHF:

Scalability Issues: Human feedback is labor-intensive and costly.

Inconsistent Judgments: Different human annotators may have subjective disagreements, leading to inconsistencies.

Dependence on Human Expertise: Some tasks require expert feedback, making RLHF less practical for specialized domains.

To address these limitations, researchers are exploring self-rewarding mechanisms that allow LLMs to improve without human intervention.

Self-Improvement Through Self-Rewarding Models

Self-rewarding models are a promising step toward AI self-improvement. Instead of relying on human-labeled feedback, the model generates multiple responses, evaluates them itself by acting as a judge, and updates its parameters through preference optimization on its own judgments.

Key Capabilities of Self-Rewarding LLMs:

Task Creation: The model generates new training tasks autonomously.

Self-Evaluation: It scores its own responses, acting as a judge with a prompt that spells out the scoring criteria.

Iterative Training: The model refines itself through repeated cycles of self-assessment and updating.
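The self-evaluation step ultimately has to turn a judge's free-text verdict into a number. A minimal sketch, assuming each judgment ends with a line like `Score: 4` on a 0 to 5 scale (the Self-Rewarding Language Models work uses an additive 5-point judging prompt; the exact format here is an assumption). Averaging several sampled judgments of the same response is one simple way to reduce judge noise; the helper names are hypothetical.

```python
import re
from statistics import mean
from typing import List, Optional

# Assumed judge output format: free-text reasoning ending in "Score: N", N in 0..5.
SCORE_PATTERN = re.compile(r"Score:\s*([0-5])\b")

def parse_score(judgment: str) -> Optional[int]:
    """Return the last 'Score: N' in a judgment, or None if there is none."""
    matches = SCORE_PATTERN.findall(judgment)
    return int(matches[-1]) if matches else None

def self_reward(judgments: List[str]) -> Optional[float]:
    """Average the parsable scores from several sampled judgments of one response."""
    scores = [s for s in map(parse_score, judgments) if s is not None]
    return float(mean(scores)) if scores else None

samples = [
    "Relevant and mostly complete, but skips one edge case. Score: 4",
    "Answers the question; explanation is thin. Score: 3",
    "I cannot decide.",  # unparsable judgments are ignored
]
print(self_reward(samples))  # 3.5
```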

The Training Process for Self-Rewarding Models:

Generation of Multiple Responses: The model produces several candidate responses for a given prompt.

Evaluation and Reward Assignment: The same model, prompted to act as a judge, assigns a score to each response, so no separately trained human-preference reward model is needed.

Preference Optimization: Using Direct Preference Optimization (DPO), a preference-learning alternative to classic reinforcement learning, the model updates its weights to favor higher-scoring responses over lower-scoring ones.

Meta-Rewarding Integration: The model assesses the effectiveness of its own reward judgments and refines the criteria used for evaluation.

Continuous Feedback Loop: The iterative nature of this process allows the model to learn from its own evaluations, improving its response quality over multiple training cycles.
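One training iteration can be sketched in two pieces: turn the self-assigned scores into a preference pair, then compute the DPO loss for that pair. As we understand the Self-Rewarding recipe, the highest- and lowest-scoring candidates form the pair and prompts where all candidates tie are skipped. Here the scores and sequence log-probabilities are hypothetical floats, and `beta=0.1` is an assumed value; in practice the log-probs come from the current model and a frozen reference copy.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str

def build_pair(prompt: str, scored: List[Tuple[str, float]]) -> Optional[PreferencePair]:
    """Pair the highest- and lowest-scored candidates; skip prompts with no score spread."""
    best = max(scored, key=lambda item: item[1])
    worst = min(scored, key=lambda item: item[1])
    if best[1] == worst[1]:
        return None  # every candidate tied, so there is no preference signal
    return PreferencePair(prompt, chosen=best[0], rejected=worst[0])

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))

def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one pair from sequence log-probs under the policy and a frozen reference."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return -log_sigmoid(margin)

pair = build_pair("Explain DPO in one sentence.",
                  [("draft A", 2.0), ("draft B", 4.5), ("draft C", 1.0)])
print(pair.chosen, pair.rejected)  # draft B draft C
```

The loss falls below log(2) once the policy raises the chosen response's likelihood relative to the reference more than it raises the rejected one's; the trained model then becomes the generator and judge for the next iteration.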

By enabling models to assess and refine their own outputs, self-rewarding approaches reduce dependence on human annotators and facilitate continuous learning.

Iterative Reasoning Preference Optimization

Iterative Reasoning Preference Optimization (Iterative RPO) is a technique aimed at enhancing LLM reasoning capabilities by iteratively refining Chain-of-Thought (CoT) reasoning.

How Iterative RPO Works:

Generation of Reasoning Chains: The model generates multiple chains of thought for a given prompt.

Preference-Based Selection: Each chain is labeled by whether its final answer matches the reference answer; chains that reach the correct answer are preferred over those that do not.

Optimization via DPO: Correct and incorrect chains are paired, and the model is trained with a DPO objective, plus a negative log-likelihood term on the winning chain, to prefer the correct reasoning chains over the weaker ones.

Iterative Refinement: The process is repeated across multiple training cycles, progressively improving the model’s reasoning skills.
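The pairing and the training objective can be sketched as follows. Chains are labeled only by whether their final answer matches the reference, every winner is paired with every loser, and the loss adds a length-normalized negative log-likelihood on the winning chain to the DPO term. The log-probabilities are hypothetical floats, and `alpha` and `beta` are assumed values, not settings from the lecture.

```python
import math
from itertools import product
from typing import List, Tuple

def rpo_pairs(samples: List[Tuple[str, str]], gold: str) -> List[Tuple[str, str]]:
    """Pair every chain that reaches the reference answer with every chain that does not.

    samples: (chain_of_thought, final_answer) tuples sampled for one question.
    """
    winners = [cot for cot, answer in samples if answer == gold]
    losers = [cot for cot, answer in samples if answer != gold]
    return list(product(winners, losers))

def rpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float,
             chosen_len: int, alpha: float = 1.0, beta: float = 0.1) -> float:
    """DPO term plus a length-normalized NLL term on the winning chain and answer."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)), written so exp() cannot overflow
    dpo = math.log1p(math.exp(-margin)) if margin >= 0 else -margin + math.log1p(math.exp(margin))
    nll = -policy_chosen / chosen_len
    return dpo + alpha * nll

cot_samples = [("cot A ... so x = 12", "12"), ("cot B ... so x = 15", "15"), ("cot C ... so x = 12", "12")]
print(rpo_pairs(cot_samples, gold="12"))  # pairs A with B and C with B
```

The NLL term keeps the likelihood of correct chains from drifting down, which plain DPO does not guarantee since it only constrains the chosen-versus-rejected margin.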

Benefits of Iterative RPO:

Improves Logical Consistency: By refining reasoning chains, the model produces more structured and logically sound responses.

Reduces Hallucinations: Filtering out weak reasoning chains minimizes the likelihood of generating false or misleading information.

Enhances Problem-Solving Ability: Particularly effective for mathematical and complex reasoning tasks, Iterative RPO helps LLMs develop more reliable solutions.

By incorporating Iterative RPO, LLMs can substantially improve their reasoning, helping make outputs more accurate, structured, and trustworthy.

Meta-Rewarding: A Higher Order of Self-Improvement

Meta-rewarding enhances self-improvement by enabling LLMs to refine their own evaluation mechanisms. It introduces an additional layer of judgment and optimization beyond basic self-rewarding models.

How Meta-Rewarding Works:

Multi-Level Evaluation: The LLM assumes three roles:

Actor: Generates responses based on given prompts.

Judge: Evaluates the quality of responses.

Meta-Judge: Assesses the validity of the judge’s decisions, refining the evaluation process.

Adaptive Feedback Loops: The model continuously refines its judgment criteria based on performance analysis, improving the accuracy of future evaluations.

Data-Driven Improvement: The meta-judge's preferences between judgments are turned into training pairs, so the model improves as a judge and not only as an actor, gradually making its evaluations more consistent.
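The meta-judge step can be sketched as turning pairwise comparisons of judgments into a preference pair for training the judge role. The Meta-Rewarding work aggregates the meta-judge's pairwise comparisons into a ranking; this sketch swaps in plain win counts for simplicity. The `meta_judge(a, b)` interface and the length-based toy meta-judge are hypothetical; a real meta-judge would be the same LLM prompted to compare two judgments.

```python
from itertools import combinations
from typing import Callable, List, Optional, Tuple

def judge_preference_pair(
    judgments: List[str],
    meta_judge: Callable[[str, str], int],
) -> Optional[Tuple[str, str]]:
    """Turn meta-judge comparisons into a (better, worse) pair of judgments.

    meta_judge(a, b) is a hypothetical interface: 0 means a is the better
    judgment, 1 means b is.
    """
    wins = [0] * len(judgments)
    for i, j in combinations(range(len(judgments)), 2):
        # Ask in both orders to dampen position bias.
        for first, second in ((i, j), (j, i)):
            better = first if meta_judge(judgments[first], judgments[second]) == 0 else second
            wins[better] += 1
    best = max(range(len(judgments)), key=lambda k: wins[k])
    worst = min(range(len(judgments)), key=lambda k: wins[k])
    if wins[best] == wins[worst]:
        return None  # meta-judge expressed no net preference
    return judgments[best], judgments[worst]

# Toy meta-judge (hypothetical): prefers the judgment that gives more justification.
def toy_meta_judge(a: str, b: str) -> int:
    return 0 if len(a) >= len(b) else 1

judgments = [
    "Score: 5",
    "Accurate and concise, and it cites the key fact the question asks for. Score: 4",
    "Mostly correct. Score: 4",
]
print(judge_preference_pair(judgments, toy_meta_judge))
```

The resulting (better, worse) judgment pair can then be trained with the same preference objective used for the actor's responses.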

Benefits of Meta-Rewarding:

Enhanced Decision-Making: By evaluating its own judgment process, the model progressively refines its ability to assess outputs accurately.

Improved Generalization: Helps models develop more reliable evaluation metrics that apply across various tasks.

Reduced Dependency on Human Oversight: As the model improves its judgment capabilities, the need for manual intervention decreases, making self-improvement more autonomous.

Meta-rewarding aims to make LLMs better not only at producing responses but also at judging and refining them over time.

EvalPlanner: Enhancing Evaluation Capabilities

EvalPlanner is an advanced framework that improves LLM evaluation by applying structured reasoning and verifiable data creation. It follows a systematic approach:

Generating Evaluation Plans: The model creates an outline for assessing different responses.

Executing Evaluations: It applies structured judgment processes to analyze outputs.

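Generating Verifiable Data: It builds synthetic response pairs in which the better response is known in advance, so sampled evaluations can be checked against that known verdict and the correct ones used for training.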
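Because each synthetic pair comes with a known better response, sampled evaluation "thoughts" (a plan, its execution, and a verdict) can be checked automatically. The sketch below splits sampled thoughts by whether their verdict matches the known answer and pairs correct with incorrect ones, the kind of data a preference-optimization step such as DPO could train on. The `EvalThought` class, the helper names, and the one-to-one `zip` pairing are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class EvalThought:
    plan: str       # what the judge intends to check, e.g. a list of criteria
    execution: str  # the plan applied step by step to both responses
    verdict: str    # "A" or "B": the response the judge prefers

def split_by_verdict(thoughts: List[EvalThought],
                     known_better: str) -> Tuple[List[EvalThought], List[EvalThought]]:
    """Separate thoughts that reach the known-correct verdict from those that do not."""
    correct = [t for t in thoughts if t.verdict == known_better]
    incorrect = [t for t in thoughts if t.verdict != known_better]
    return correct, incorrect

def thought_pairs(thoughts: List[EvalThought],
                  known_better: str) -> List[Tuple[EvalThought, EvalThought]]:
    """Pair correct with incorrect evaluation thoughts for preference training."""
    correct, incorrect = split_by_verdict(thoughts, known_better)
    return list(zip(correct, incorrect))

thoughts = [
    EvalThought("check correctness, then clarity", "A is correct; B has an arithmetic slip", "A"),
    EvalThought("check length only", "B is longer", "B"),
    EvalThought("verify the final answer", "A's answer matches; B's does not", "A"),
]
pairs = thought_pairs(thoughts, known_better="A")
print(len(pairs))  # 1
```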

By incorporating EvalPlanner, LLMs learn to evaluate responses more systematically, which makes their judgments more accurate.

Conclusion

The development of self-improving LLMs represents a major leap in AI evolution. By integrating System 2 reasoning, self-rewarding mechanisms, meta-rewarding processes, Iterative RPO, and evaluation frameworks like EvalPlanner, researchers are paving the way for more autonomous and intelligent AI systems.