Boosting Brain-Inspired Memory for LLMs: HippoRAG and the Quest for Reasoning

This blog is based on learnings and insights from Lecture #3 of the Advanced LLM Agents MOOC. The earlier blog based on the previous lecture can be found here

Despite their impressive capabilities, Large Language Models (LLMs) still struggle with memory retention, reasoning, and the ability to synthesize complex information across different sources. Unlike the human brain, which effectively integrates and retrieves knowledge, LLMs face limitations in long-term memory and contextual reasoning.

HippoRAG is a novel Retrieval-Augmented Generation (RAG) framework inspired by the hippocampal indexing theory of human memory. It enhances LLMs' ability to retrieve, associate, and apply knowledge, which improves their performance on tasks that require connecting information across sources. Alongside this, the concept of grokking, where models generalize only after extensive training, provides valuable insights into how LLMs develop reasoning skills.

Let's look at how HippoRAG strengthens LLM memory, its advantages in multi-hop question answering, and the broader implications of grokking in AI reasoning.

The Power of Human-Inspired Memory: HippoRAG

Understanding Hippocampal Memory Indexing Theory

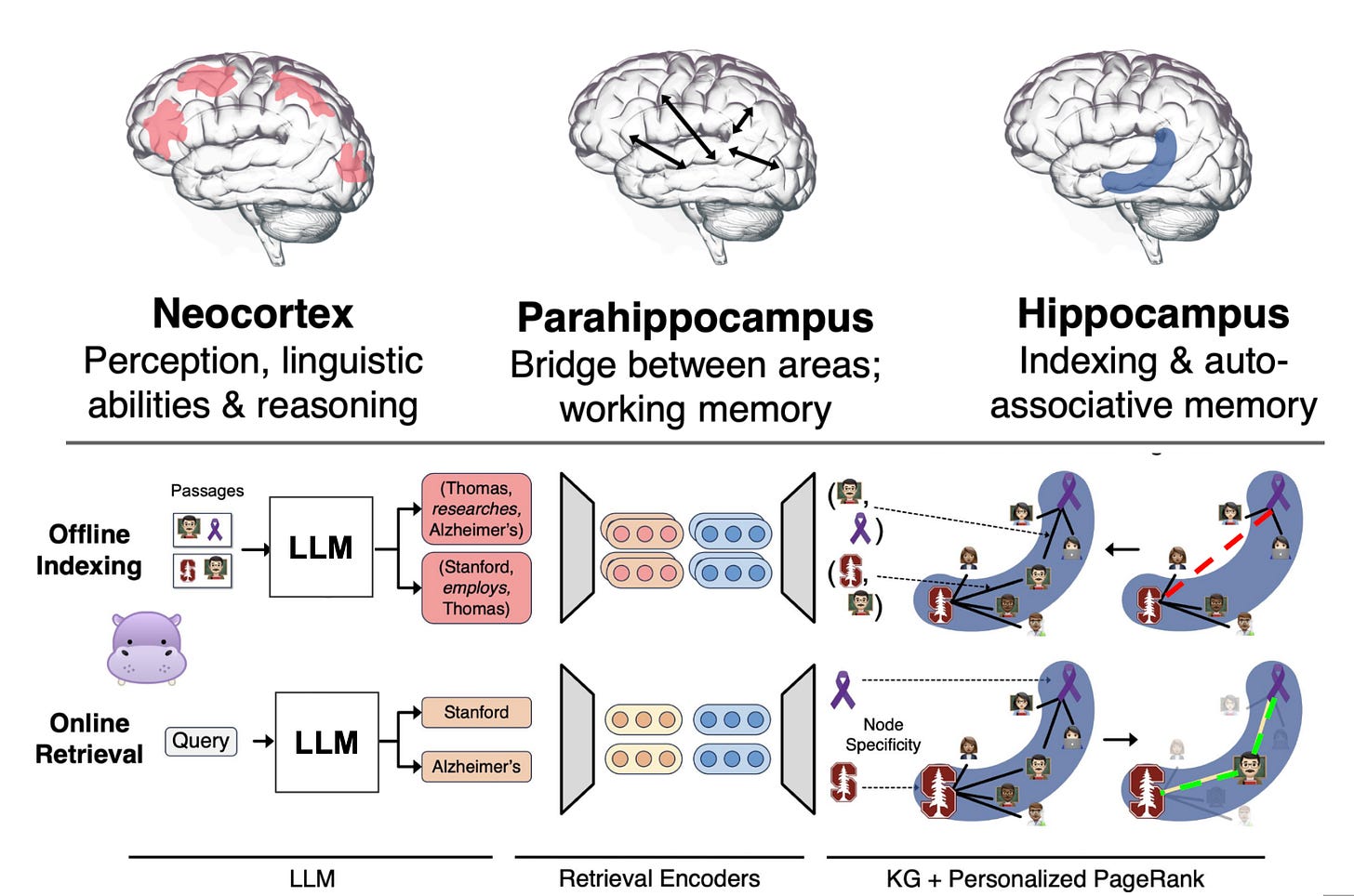

Human memory relies on three critical components:

Neocortex – Responsible for processing raw experiences and extracting high-level patterns.

Hippocampus – Acts as an index, connecting disparate memory fragments for retrieval.

Parahippocampal Regions – Facilitate communication between the hippocampus and neocortex, supporting memory recall.

This system enables pattern separation (storing similar memories distinctly) and pattern completion (retrieving full memories from partial cues), key attributes that current LLMs lack.

Limitations of LLM and RAG

Large language models (LLMs) face the issue of catastrophic forgetting, where learning new information can unintentionally alter previously learned information. Non-parametric memory avoids this: instead of directly updating the model's parameters with new experiences, it stores them externally to the model. The de facto solution for providing LLMs with this kind of long-term memory is RAG.

Embedding-based RAG systems have limitations compared to human memory. Human memory can recognize patterns in massive amounts of data, create associations, and dynamically retrieve information based on the current context. Current RAG systems do not make associations across different pieces of information as effectively. They struggle to integrate new knowledge across passage boundaries because each new passage is encoded in isolation. This is problematic for tasks requiring knowledge integration across passages or documents, such as scientific literature review, legal case briefing, and medical diagnosis.

HippoRAG aims to bridge this gap.

How HippoRAG Mimics Human Memory

HippoRAG employs a two-step process to enhance LLM memory:

Offline Indexing (Memory Encoding): HippoRAG structures knowledge using a schemaless knowledge graph (KG), mirroring how the hippocampus organizes information. This graph captures relationships between concepts, allowing better recall.

Online Retrieval (Memory Recall): When faced with a query, HippoRAG identifies relevant concepts, applies Personalized PageRank (PPR) to retrieve associated knowledge, and ranks passages for relevance, mimicking how humans recall related memories.
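The two steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not HippoRAG's actual implementation: the triples, entity names, passage IDs, the `damping` value, and the helper functions (`build_graph`, `personalized_pagerank`, `rank_passages`) are all hypothetical, and the query entities are assumed to have already been extracted and matched to graph nodes.

```python
from collections import defaultdict

# Hypothetical (passage_id, subject, relation, object) triples, standing in
# for what an OpenIE step might extract. All names are made up.
TRIPLES = [
    ("p1", "drug_a", "inhibits", "protein_x"),
    ("p2", "protein_x", "drives", "disease_y"),
    ("p3", "drug_b", "treats", "disease_z"),
]

def build_graph(triples):
    """Offline indexing: undirected entity graph plus entity -> passages map."""
    neighbors = defaultdict(set)
    mentions = defaultdict(set)
    for passage_id, subj, _relation, obj in triples:
        neighbors[subj].add(obj)
        neighbors[obj].add(subj)
        mentions[subj].add(passage_id)
        mentions[obj].add(passage_id)
    return neighbors, mentions

def personalized_pagerank(neighbors, seeds, damping=0.5, iterations=50):
    """Power iteration where the restart mass returns only to the seed nodes.

    Assumes every seed is a node in the graph. The damping value is an
    illustrative choice.
    """
    nodes = list(neighbors)
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    score = dict(restart)
    for _ in range(iterations):
        nxt = {n: (1.0 - damping) * restart[n] for n in nodes}
        for n in nodes:
            share = damping * score[n] / len(neighbors[n])
            for m in neighbors[n]:
                nxt[m] += share
        score = nxt
    return score

def rank_passages(score, mentions):
    """Online retrieval: a passage's score is the sum of its entities' scores."""
    totals = defaultdict(float)
    for entity, passages in mentions.items():
        for passage_id in passages:
            totals[passage_id] += score[entity]
    return sorted(totals, key=totals.get, reverse=True)

neighbors, mentions = build_graph(TRIPLES)
# Assume the query "Which disease might drug_a affect?" maps to this seed.
scores = personalized_pagerank(neighbors, seeds={"drug_a"})
ranking = rank_passages(scores, mentions)
print(ranking)
```

In this toy graph, passage `p2` never mentions `drug_a`, yet it ranks above the unrelated `p3` because probability mass flows from `drug_a` through `protein_x` to `disease_y`. That is the associative, single-step behaviour the retrieval step is meant to provide.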

HippoRAG in Action: Multi-Hop Question Answering

A key strength of HippoRAG is its performance in multi-hop question answering, where reasoning over multiple knowledge sources is required. Traditional RAG models often struggle with such queries, failing to retrieve the right context in a single step.

Real-World Applications

HippoRAG excels in path-finding questions, where multiple pieces of information must be connected across different domains. For example:

Medical Research: Identifying relationships between drugs and diseases based on published studies.

Literature & Cinema: Linking characters, themes, and historical influences in storytelling.

Legal Analysis: Tracing case law precedents across multiple jurisdictions.

By leveraging single-step multi-hop retrieval, HippoRAG outperforms traditional iterative methods, making reasoning more efficient and accurate.

Reasoning and Grokking: Unlocking Implicit Learning

LLMs struggle with implicit reasoning, which involves inducing structured and compressed representations of facts and rules. While explicit verbalizations of reasoning steps (e.g., chain-of-thought rationales) can improve task performance, they are not available during large-scale pre-training, where the model's core capabilities are acquired.

What is Grokking?

Grokking is a phenomenon observed in transformers where the model achieves high training accuracy quickly (overfitting), but generalization to unseen data only occurs after an extended period of training far beyond the point of overfitting. This delayed generalization is what's referred to as "grokking". It resembles the way humans develop reasoning skills: initial memorization followed by deeper understanding.
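To make the "delay" concrete, here is a small sketch of how one might measure it from a training log. The log values below are synthetic and invented purely for illustration (they are not results from any experiment), and the helper `first_step_reaching` and the 0.99 threshold are assumptions.

```python
def first_step_reaching(history, key, threshold):
    """Return the first logged step whose metric is >= threshold, else None."""
    for step, metrics in history:
        if metrics[key] >= threshold:
            return step
    return None

# Synthetic log for illustration only: (step, accuracies).
history = [
    (500, {"train": 0.71, "val": 0.04}),
    (1_000, {"train": 1.00, "val": 0.06}),
    (5_000, {"train": 1.00, "val": 0.09}),
    (20_000, {"train": 1.00, "val": 0.41}),
    (50_000, {"train": 1.00, "val": 1.00}),
]

fit_step = first_step_reaching(history, "train", 0.99)
generalize_step = first_step_reaching(history, "val", 0.99)

# A large gap between the two steps is the signature of grokking.
delay_factor = generalize_step / fit_step
print(fit_step, generalize_step, delay_factor)
```

The practical takeaway is that stopping training once training accuracy saturates would miss the later jump in held-out accuracy entirely.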

Grokking’s Role in AI Reasoning

It enables implicit reasoning, where models derive rules and patterns without explicit programming.

The transition from memorization to generalization depends on the ratio of inferred to atomic facts in training data.

This process helps LLMs move beyond rote memorization to true pattern recognition and inference.

Understanding grokking can refine LLM training strategies, improving their ability to reason, plan, and adapt beyond rote memorization.
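The ratio of inferred to atomic facts mentioned above can be illustrated with a toy dataset builder. This is a sketch under assumptions, not the setup from the lecture: it assumes atomic facts are `(head, relation) -> tail` lookups, inferred facts are two-hop compositions of atomic facts, and the function names and sizes are hypothetical.

```python
import random

def make_atomic_facts(num_entities, num_relations, seed=0):
    """Random lookup table: (head, relation) -> tail."""
    rng = random.Random(seed)
    return {
        (h, r): rng.randrange(num_entities)
        for h in range(num_entities)
        for r in range(num_relations)
    }

def compose_two_hop(atomic):
    """Inferred facts: (head, r1, r2) -> tail, obtained by chaining two atomic facts."""
    relations = sorted({r for _, r in atomic})
    inferred = {}
    for (h, r1), bridge in atomic.items():
        for r2 in relations:
            inferred[(h, r1, r2)] = atomic[(bridge, r2)]
    return inferred

def sample_training_set(atomic, inferred, ratio, seed=0):
    """Keep all atomic facts and sample about ratio * len(atomic) inferred facts."""
    rng = random.Random(seed)
    k = min(len(inferred), int(ratio * len(atomic)))
    chosen = rng.sample(sorted(inferred), k)
    return list(atomic.items()), [(key, inferred[key]) for key in chosen]

atomic = make_atomic_facts(num_entities=20, num_relations=4)
inferred = compose_two_hop(atomic)
atomic_train, inferred_train = sample_training_set(atomic, inferred, ratio=3.0)
print(len(atomic_train), len(inferred_train))
```

Sweeping `ratio` while keeping the atomic facts fixed is one way to probe how the mix of training data affects the shift from memorization to generalization.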

Current Limitations and Scope for Improvement

While HippoRAG is a promising step forward, it faces challenges:

Accuracy: HippoRAG primarily focuses on structured entity relationships, which may overlook broader contextual signals crucial for nuanced retrieval. Accuracy could be improved by refining how the system ranks retrieved documents.

Imperfect Open Information Extraction (OpenIE): The system depends on OpenIE techniques, which may introduce knowledge gaps, especially when dealing with longer and more complex documents. Improving natural language understanding capabilities will help in extracting more nuanced information. Machine learning techniques could also be incorporated to enhance the ranking of retrieved knowledge.

Scalability Concerns: The computational cost of running advanced graph traversal algorithms at scale remains an issue. Improving current embedding-based retrieval methods, and building hybrid models that combine knowledge graphs with deep learning techniques, could provide better performance.

Conclusion

HippoRAG represents a significant leap in brain-inspired memory systems for LLMs, addressing key limitations in retrieval and reasoning. By emulating hippocampal memory functions, it enables better knowledge integration, efficiency, and adaptability.

At the same time, grokking highlights how LLMs learn to reason over time, emphasizing the need for extensive training and structured learning environments. Together, HippoRAG and grokking offer compelling insights into the evolution of AI reasoning, paving the way for more intelligent and autonomous language agents.

As AI research advances, combining biologically inspired memory models with refined learning strategies will be essential in developing LLMs that think, reason, and remember like humans.