The Role of Memory in LLMs and AI Agents

This is the second installment in our series exploring the foundational principles of AI agents. In the previous article, we dove into the crucial role of external tools. Now we're turning our attention to a critical yet often confusing topic: memory.

Like many others, I've found that the varied terminology (short-term, long-term, entity, and contextual memory) can make it difficult to grasp the core concepts and to differentiate between memory in LLMs and memory in AI agents. This article aims to solve that problem with a clear and concise explanation of AI memory and its crucial role in enabling intelligent behavior.

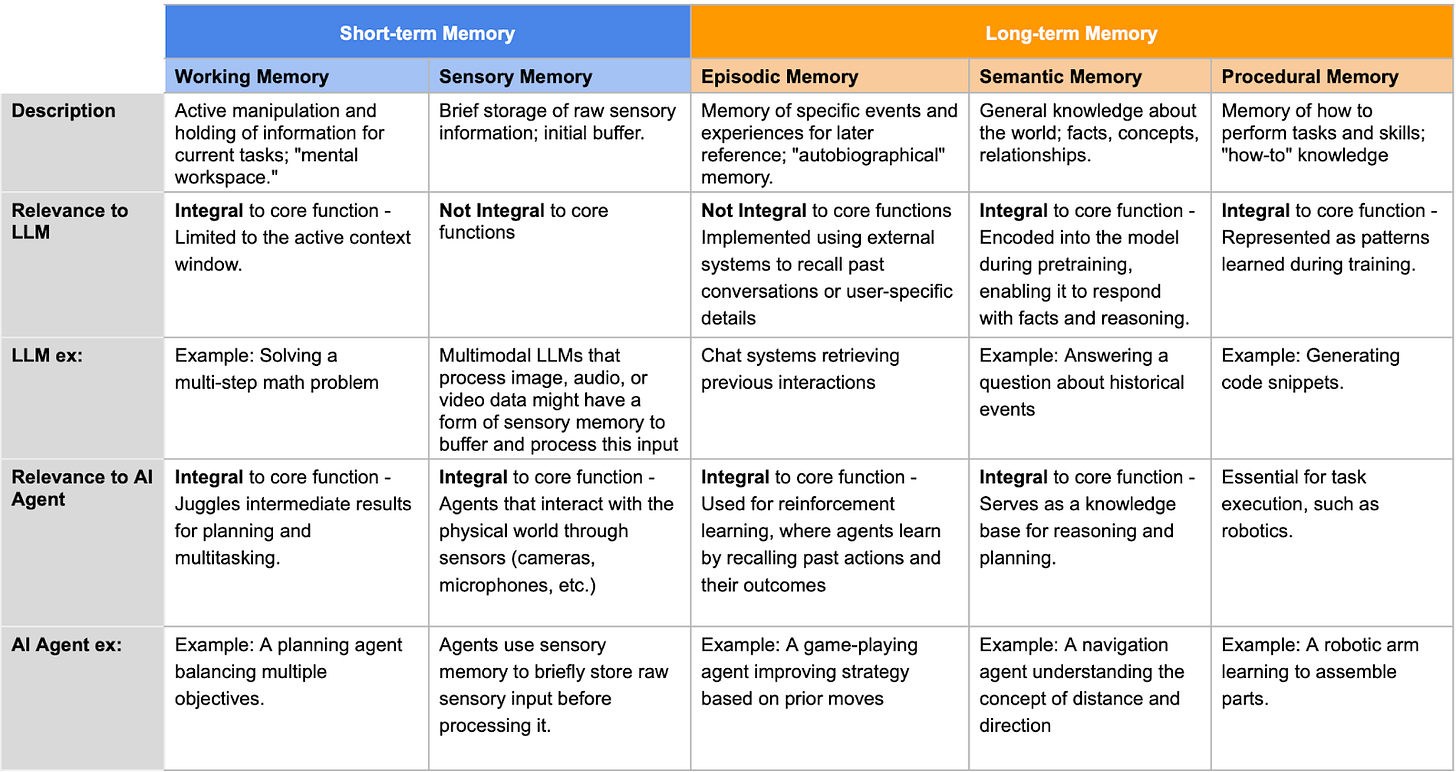

Here are my learnings and findings in a nutshell. The rest of this write-up focuses on unpacking this information.

The Importance of Memory in AI

Memory is a fundamental aspect of intelligence, both natural and artificial. In AI, memory allows systems to retain information, learn from past experiences, and make informed decisions based on context. LLMs specialize in natural language processing and generation, whereas AI agents operate across broader tasks and interact dynamically with their environments. Both draw on memory concepts, but their implementations and needs differ significantly.

Types of Memory

At a high level, memory for AI agents can be classified into short-term and long-term memory. Short-term memory allows an agent to maintain state within a session, while long-term memory is the storage and retrieval of historical data across multiple sessions. Human memory is generally classified as semantic, episodic, procedural, working, and sensory. The mapping between human memory and agentic memory could look like this:

Short-Term Memory (STM) in Humans ≈ Working Memory + Sensory Memory in AI Agents: Short-term memory in humans is the capacity to hold a small amount of information in mind in an active, readily available state for a short period. In AI agents, this is split into two more granular components:

Working Memory: This directly corresponds to the active manipulation and holding of information for current tasks. It's like the "mental workspace" where the agent actively processes information.

Sensory Memory: This is the very brief holding of raw sensory data (visual, auditory, etc.) before it's either passed to working memory for processing or discarded. It's the initial buffer.

Long-Term Memory (LTM) in Humans ≈ Episodic Memory + Semantic Memory + Procedural Memory in AI Agents: Long-term memory in humans is the storage of information over an extended period. In AI agents, this is further categorized into:

Episodic Memory: This is the agent's "autobiographical" memory of specific events and experiences, directly analogous to human episodic memory. It allows AI agents to learn from past interactions and adapt their behavior.

Semantic Memory: This is the agent's store of general knowledge about the world, including facts, concepts, and relationships, similar to human semantic memory. It is essential for LLMs to understand language and for AI agents to reason about the world.

Procedural Memory: This is the memory of how to perform tasks and skills, corresponding to human procedural memory (e.g., riding a bike).
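The short-term versus long-term split described at the start of this section can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `AgentMemory` class, its method names, and the JSON file used as the persistent store are all assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path


class AgentMemory:
    """Toy split between session state and a persistent store (hypothetical design)."""

    def __init__(self, store_path):
        self.store_path = store_path
        self.short_term = []  # lives only for the current session
        # Long-term memory is reloaded from disk if an earlier session saved it.
        if store_path.exists():
            self.long_term = json.loads(store_path.read_text())
        else:
            self.long_term = []

    def observe(self, message):
        self.short_term.append(message)

    def end_session(self):
        # Persist this session's messages, then clear the session state.
        self.long_term.extend(self.short_term)
        self.store_path.write_text(json.dumps(self.long_term))
        self.short_term.clear()


store = Path(tempfile.mkdtemp()) / "memory.json"

first = AgentMemory(store)
first.observe("User prefers metric units")
first.end_session()

second = AgentMemory(store)  # a new session starts with empty short-term memory
print(second.short_term)  # []
print(second.long_term)   # ['User prefers metric units']
```

The second session knows nothing about the first except what was explicitly written to the store, which is the essence of the distinction.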

Memory in LLMs

LLMs are primarily designed for natural language processing. Their memory capabilities are largely centered around processing and generating text.

Working Memory (Context Window):

The core memory mechanism in LLMs is the "context window." This acts as a short-term memory buffer, storing the most recent tokens (words or sub-word units) from the current conversation. The size of this window is limited, meaning older parts of a long conversation can be "forgotten." The context window is essential for maintaining coherence and context within a single interaction.
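To make the "forgetting" concrete, here is a rough sketch of how an application might trim a conversation so it fits a fixed window. The function name `fit_to_window` is hypothetical, and counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```python
def fit_to_window(messages, max_tokens):
    """Keep the most recent messages whose combined token count fits the window."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())  # assumption: one word is one token
        if used + cost > max_tokens:
            break  # everything older than this point is "forgotten"
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


conversation = [
    "My name is Ada",            # 4 tokens
    "I live in Lisbon",          # 4 tokens
    "What is the weather like",  # 5 tokens
]
window = fit_to_window(conversation, max_tokens=10)
print(window)  # ['I live in Lisbon', 'What is the weather like']
```

With a budget of 10 tokens, the oldest message (the user's name) no longer fits, so the model would never see it on the next turn.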

Semantic Memory (Encoded in Parameters):

LLMs are trained on massive text datasets, and this training encodes a vast amount of semantic knowledge about the world in their parameters (weights). This pre-trained knowledge allows them to understand the meaning of words, concepts, and the relationships between them. The knowledge is not stored as explicit data within the model; it is implicit in the learned weights.

Simulating Other Memory Types:

While LLMs primarily rely on working memory and pre-trained semantic knowledge, they can simulate aspects of other memory types within the confines of the context window:

Episodic Memory (Limited): By providing details of a past experience within the context window, users can guide the LLM's responses based on that specific context. However, this memory is not retained beyond the current interaction.

Procedural Memory (Through Examples): Similarly, by providing examples of a procedure within the context window, LLMs can often infer and complete the remaining steps. Again, this is not stored as a general procedure.
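Both kinds of simulation come down to what gets placed in the prompt. The sketch below assembles a prompt that carries a past event (episodic context) and a couple of worked examples (procedural context). The `build_prompt` helper and the prompt layout are assumptions for illustration, not a format any particular model requires.

```python
def build_prompt(past_events, worked_examples, request):
    """Assemble one prompt; the model sees this text only for the current call."""
    lines = ["Relevant history:"]
    lines += [f"- {event}" for event in past_events]      # episodic context
    lines.append("Examples of the procedure:")
    lines += [f"{q} -> {a}" for q, a in worked_examples]  # procedural context
    lines.append(f"{request} ->")                         # left open for the model
    return "\n".join(lines)


prompt = build_prompt(
    past_events=["Yesterday the user asked for dates in ISO format"],
    worked_examples=[
        ("March 5, 2024", "2024-03-05"),
        ("July 19, 2023", "2023-07-19"),
    ],
    request="January 2, 2025",
)
print(prompt)
```

Nothing here is stored by the model: if the next call omits the history and examples, the "memory" is gone.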

In summary, LLMs primarily rely on semantic memory, encoded in their vast network of learned parameters. They also utilize working memory, represented by the context window, to maintain context within a conversation. However, LLMs generally lack episodic memory, limiting their ability to recall past interactions beyond the current context. Their procedural memory is implicit in their architecture and training, enabling them to process language and generate text.

Memory in AI Agents

AI agents are designed to interact with environments, solve problems, and achieve goals. This often requires more diverse and persistent memory capabilities.

Working Memory

Like LLMs, AI agents use working memory to hold information relevant to their current task. This allows them to process immediate information and maintain short-term focus.

Semantic Memory

AI agents often use semantic memory to store general knowledge about the world. This can be implemented using knowledge graphs, databases, or embeddings in vector databases, providing the agent with a foundation for understanding its environment.
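As a rough illustration of the knowledge-graph option, facts can be held as (subject, relation, object) triples and looked up by subject and relation. The `TripleStore` class below is a hypothetical in-memory stand-in; a real agent would more likely use a graph database.

```python
from collections import defaultdict


class TripleStore:
    """Minimal (subject, relation, object) store standing in for a knowledge graph."""

    def __init__(self):
        self._facts = defaultdict(set)  # (subject, relation) -> {objects}

    def add(self, subject, relation, obj):
        self._facts[(subject, relation)].add(obj)

    def query(self, subject, relation):
        # .get avoids creating empty entries for unknown keys
        return sorted(self._facts.get((subject, relation), set()))


kg = TripleStore()
kg.add("Paris", "capital_of", "France")
kg.add("France", "member_of", "EU")
kg.add("France", "member_of", "NATO")

print(kg.query("France", "member_of"))   # ['EU', 'NATO']
print(kg.query("Berlin", "capital_of"))  # []
```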

Episodic Memory

Episodic memory is crucial for agents to learn from past experiences. This involves storing specific events, including what happened, where, and when. This can be implemented using replay buffers, memory networks, or by storing embeddings of experiences in vector databases.
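A simple way to picture this is a log of events, each tagged with what happened, where, and when, plus a recall function. The sketch below uses a plain list and an exact-match filter; the `Episode` and `EpisodicMemory` names are assumptions, and a vector database would replace the filter with similarity search.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Episode:
    what: str
    where: str
    when: datetime


class EpisodicMemory:
    def __init__(self):
        self._episodes = []

    def record(self, what, where, when):
        self._episodes.append(Episode(what, where, when))

    def recall(self, where, limit=3):
        """Return the most recent episodes that happened at a given place."""
        matches = [e for e in self._episodes if e.where == where]
        matches.sort(key=lambda e: e.when, reverse=True)
        return matches[:limit]


memory = EpisodicMemory()
memory.record("Order lookup failed", "billing_api", datetime(2024, 5, 1, 9, 0))
memory.record("User asked for a refund", "chat", datetime(2024, 5, 1, 9, 5))
memory.record("Order lookup succeeded on retry", "billing_api", datetime(2024, 5, 1, 9, 10))

for episode in memory.recall("billing_api"):
    print(episode.when.time(), episode.what)
# 09:10:00 Order lookup succeeded on retry
# 09:00:00 Order lookup failed
```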

Procedural Memory

Procedural memory allows agents to store and execute learned skills and procedures. This can be implemented using various techniques, including reinforcement learning algorithms that learn action policies or by storing sequences of actions in memory.
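The "stored sequence of actions" variant is the easiest to sketch. In the example below, a skill is just a named list of functions that are applied in order. The `ProceduralMemory` class and the text-cleaning skill are hypothetical, chosen only to keep the example self-contained; learning the sequence (for example with reinforcement learning) is out of scope here.

```python
class ProceduralMemory:
    """Stores named skills as ordered lists of action functions (hypothetical design)."""

    def __init__(self):
        self._skills = {}

    def learn(self, name, steps):
        self._skills[name] = list(steps)

    def execute(self, name, state):
        for step in self._skills[name]:
            state = step(state)  # each action transforms the current state
        return state


def strip_whitespace(text):
    return text.strip()


def lowercase(text):
    return text.lower()


def collapse_spaces(text):
    return " ".join(text.split())


skills = ProceduralMemory()
skills.learn("normalize_query", [strip_whitespace, lowercase, collapse_spaces])
print(skills.execute("normalize_query", "  Reset   MY Password "))  # reset my password
```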

Sensory Memory

Agents that interact with the physical world through sensors (cameras, microphones, etc.) use sensory memory to briefly store raw sensory input before processing it.
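One plausible way to model this brief buffer is a fixed-size queue that silently drops the oldest readings. The `SensoryBuffer` class and the temperature readings below are illustrative assumptions; real sensor pipelines are considerably more involved.

```python
from collections import deque


class SensoryBuffer:
    def __init__(self, capacity):
        # With maxlen set, appending to a full deque discards the oldest item.
        self._frames = deque(maxlen=capacity)

    def sense(self, reading):
        self._frames.append(reading)

    def flush(self):
        """Hand the buffered readings to working memory and clear the buffer."""
        frames = list(self._frames)
        self._frames.clear()
        return frames


buffer = SensoryBuffer(capacity=3)
for reading in [20.1, 20.3, 20.2, 25.9, 26.4]:
    buffer.sense(reading)

print(buffer.flush())  # [20.2, 25.9, 26.4]
print(buffer.flush())  # []
```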

In summary, AI agents combine these memory types to achieve more sophisticated and versatile behavior. They utilize external tools like databases, knowledge graphs, and vector databases to implement episodic memory, enabling them to learn from past experiences and personalize interactions. Semantic memory remains crucial for understanding the world and making informed decisions. Working memory, often enhanced by the LLM's context window, helps maintain context and track ongoing interactions. Procedural memory allows agents to perform actions and achieve goals, often implemented through rules, decision trees, or reinforcement learning.

Tools for Implementing Memory

Vector databases and embeddings are becoming increasingly important for implementing memory in AI systems, especially for semantic and episodic memory. Here is a summary of different techniques for implementing memory:

Knowledge Graphs: Store semantic memory in a structured format, representing entities and relationships.

Databases (Relational and NoSQL): Store various types of memory, including episodic memory.

Use Cases: Logs of past interactions and user profiles.

Vector Databases: Specifically designed to store and search for vectors, crucial for working with embeddings.

Use Cases: Long-term memory for persistent knowledge. Entity memory for personalized interactions.

Embeddings: Capture the semantic meaning of data, enabling efficient search and retrieval based on meaning.

Use Cases: Retrieval-Augmented Generation (RAG) for short-term and long-term memory. Associative memory by linking similar concepts.

Retrieval-Augmented Generation (RAG): Combines pre-trained models with external knowledge retrieval.

Use Cases: Enhancing LLM responses with relevant context. Enabling AI agents to access domain-specific knowledge.
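The way embeddings, vector search, and RAG fit together can be sketched without any external services. In the toy example below, `embed` is a deliberately crude stand-in (word counts) for a real embedding model, and a Python list plays the role of the vector database; the function names and sample documents are assumptions for illustration only.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[word] * b[word] for word in a)  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Rank documents by similarity to the query and return the top k."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


documents = [
    "refunds are processed within five business days",
    "the office is closed on public holidays",
    "passwords must be reset every ninety days",
]
question = "how are refunds processed"
context = retrieve(question, documents, k=1)

# The retrieved text is placed in the prompt before the question (the RAG step).
prompt = f"Context: {context[0]}\nQuestion: {question}"
print(prompt)
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality and scale of retrieval, but the flow of embed, search, and augment stays the same.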

Conclusion

Memory systems are fundamental to the operation of LLMs and AI agents, shaping their ability to process, recall, and adapt information. While LLMs rely heavily on short-term, semantic, and external memory systems, AI agents utilize a broader range of memory types to interact dynamically with their environment. Frameworks like CrewAI are pushing the boundaries of AI agent memory, enabling personalized, contextually aware, and goal-oriented interactions. Tools like vector databases, embeddings, and RAG enable the implementation of these memory types, enhancing the capabilities of both LLMs and AI agents.

References

LangChain blog: Memory for agents

CoALA (Cognitive Architectures for Language Agents) paper: https://arxiv.org/pdf/2309.02427